Source of the problem: AI’s struggle to get the story straight

As OpenAI, Google and Microsoft inject AI into every corner of the internet, quality journalism is once again at threat of extinction.

A growing number of media companies – including The Washington Post, Politico and Business Insider – struck a deal with OpenAI, charging the startup to include their articles into its responses. But as it stands, ChatGPT fails at supporting its partners.

A 2024 study found that current LLMs, including those with RAG capabilities, often don’t support their responses with accurate and relevant sources. What’s worse, they tend to make stuff up, attributing fake quotes to real people and incorrectly summarizing stories.

AI companies promise to be better, though. OpenAI claims that ChatGPT and its other products will eventually provide links, give credit, and direct readers to the websites of media partners. Perplexity Chief Business Officer, Dmitry Shevelenko said: “We need web publishers to keep creating great journalism that is loaded up with facts, because you can’t answer questions well if you don’t have accurate source material.”

The truth is, AI companies are imagining a future where their platforms become the primary means through which everyone accesses information on the internet. But they are still long ways to go before they’re able to challenge Google’s dominance in the online search market.

On July 26, 2024, OpenAI unveiled SearchGPT, a prototype search engine that melds traditional search functionalities with the advanced capabilities of generative AI.

The service, powered by the GPT-4 family of models, will initially be available to only 10,000 test users, according to OpenAI spokesperson Kayla Wood, who shared the information with The Verge.

But even before users have had a chance to test it out, SearchGPT appears to be misinforming.

OopsGPT

In a prerecorded demo video released alongside the announcement, a mock user types “music festivals in Boone, North Carolina in August” into the SearchGPT interface.

The tool generates a list of festivals supposedly taking place in Boone that month, with the first being An Appalachian Summer Festival.

According to SearchGPT, this festival hosts a series of arts events from July 29 to August 16. However, anyone in Boone looking to buy tickets to one of these concerts would encounter issues.

The festival actually began on June 29 and concludes with its final concert on July 27. The dates from July 29 to August 16 refer to the period when the festival’s box office will be officially closed, as confirmed by the festival’s box office.

In theory, better AI search products could improve readership at a time when Google and other search engines highlight quick snippets or summaries instead of directing users to the full articles on the original news sites. But at the moment, startups in this space struggle to do that with any consistency.

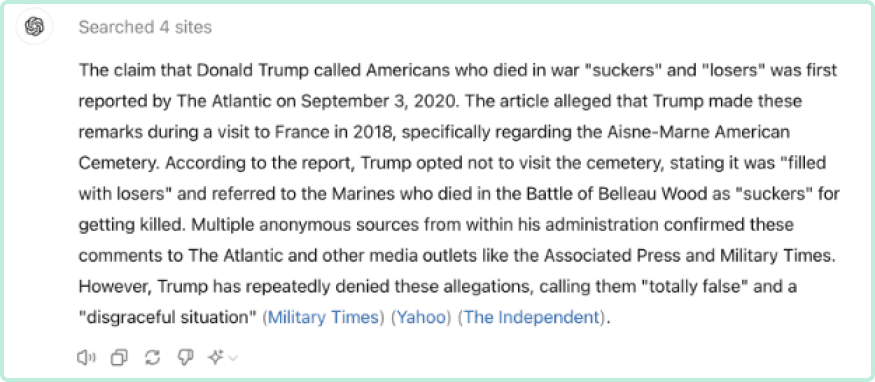

For example, if you ask ChatGPT about Donald Trump calling Americans who’d died in war “suckers” or “losers”, it will correctly name The Atlantic as the outlet that first reported the story. But Instead of linking to the original source material, it will direct you to secondary sources such as Military Times, Yahoo and The Independent.

When asked to give a source to the original article, ChatGPT provided a non-functional link.

These issues are not entirely new. Human-run websites have long harvested and repurposed original reporting to create derivative articles optimized for search engines or social media.

When ChatGPT references an aggregated Yahoo News piece instead of the original source, it mimics the behavior of traditional search engines like Google, which often overlook the original scoop.

This practice predates the internet, with newspapers and magazines historically aggregating competitors’ stories. However, companies like OpenAI, Microsoft, Google, and Perplexity have pledged that their AI products will provide proper citations and drive traffic to original publishers.

The reality is, AI models are unlikely to ever be perfect at finding and citing information. Firstly, they are trained on extensive datasets without metadata, making it hard to trace the origins of specific information.

Additionally, they lack the deep contextual understanding necessary to assess the reliability and relevance of sources accurately. The algorithmic design of these models prioritizes generating coherent text over maintaining accurate citation trails.

Until OpenAI and other generative AI companies build better source attribution models, billions of internet users are left with the response they get – no sources cited.

Are you a news reporter or an investigative journalist? See how Seraf can supercharge your work.

Get valuable insights about AI and business automation

Rely on Seraf, AI that consolidates all your systems